LSTM cell

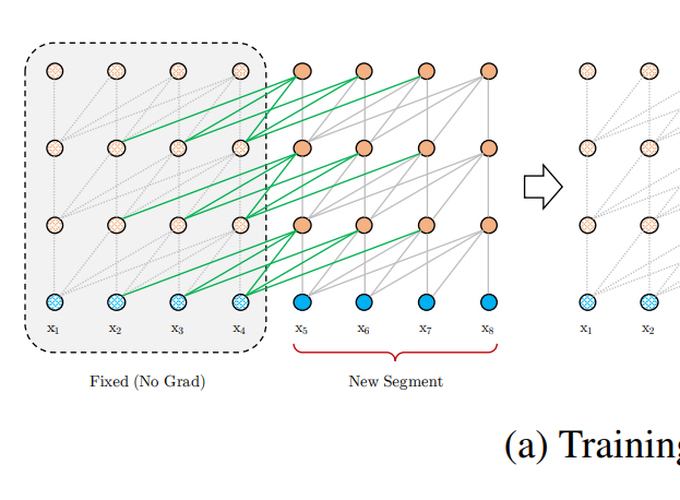

Transformer-XL

Abstract

This talk summarizes the paper Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context. It assumes that the audience is already familiar with the Attention Is All You Need paper, and it also discusses some of that paper's high-level concepts.

Date

Jul 5, 2019, 3:30 PM to 5:30 PM

Event

Location

MICL Lab, Singapore